What is FlexMonkey?

FlexMonkey is an open source tool for testing Flex and AIR applications that is used by both software developers and quality assurance professionals. The open source project includes an AIR-based console that allows users to quickly create and run functional tests by providing the ability to record, play back, and verify an application’s state at any given point. FlexMonkey also allows users to generate FlexUnit / ActionScript versions of the tests created in the AIR console, which makes it easy to set up automated runs in continuous integration environments.

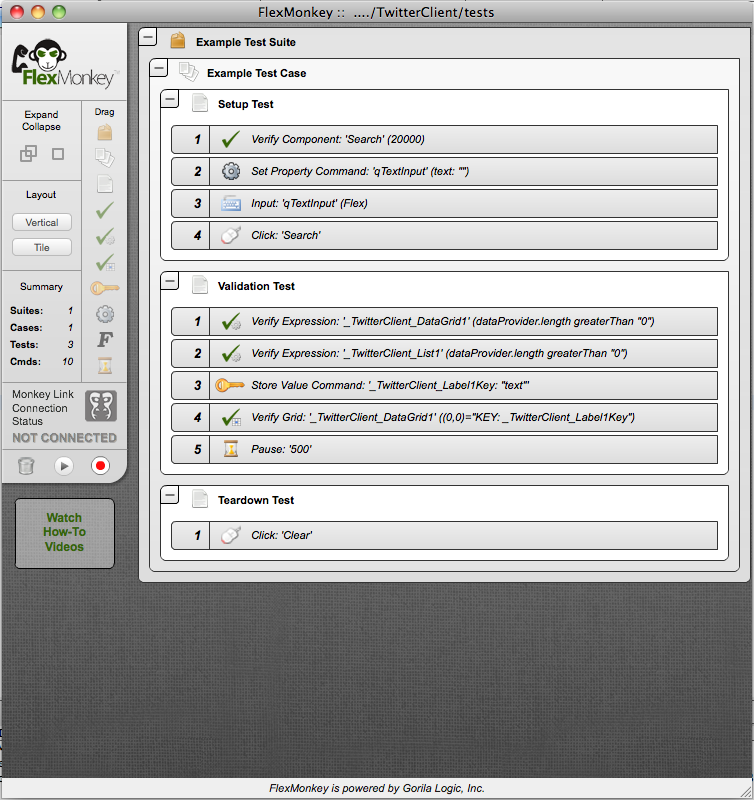

The latest version of FlexMonkey has undergone major changes that existing users will notice right away, as the AIR console has been completely rewritten (see Figure 1). Beyond the user interface updates, a major clean-up to the internals has been made to improve the overall quality of the tool and to fully integrate FlexMonkey with the latest release of FlexUnit 4. FlexUnit allows FlexMonkey to leverage the latest testing features for code generation and gives FlexMonkey a rock-solid runner architecture for the FlexMonkey Console. Overall, these updates make it easier to create tests and make them more robust when playing them back.

Figure 1. New FlexMonkey AIR Console

Functional Testing versus Unit Testing

Before we learn more about how you can use FlexMonkey to implement your Flex tests, let’s spend a few moments discussing software testing theory. Traditionally, functional tests are created, managed, and run by quality assurance professionals who are not responsible for coding the implementation, as opposed to unit tests, which are the responsibility of the developers who are writing the code.

Functional tests treat the application as a black box and strive to verify that the application works as intended for the end users. This process can be automated or manual and involves actually interacting with the application as an end user would (e.g. clicking, typing, etc.). Whether the tests are automated or not, the steps in these test cases can be captured in plain English and understood by non-developers involved in the project.

Unit tests differ from functional tests in that they have intimate knowledge of the code and are best implemented and understood by the developers writing the code. Because they deal with self-contained “units,” they are very good at isolating problems when they arise. Unit tests excel at verifying that the business logic portions of the code are producing the correct results. In addition, they are key to protecting the implementation as the code base changes over time.

When it comes to user interface testing, the unit testing paradigm can quickly break down, as it is difficult to split user interface code into testable units without strict coding standards and extensive knowledge of both the Flex platform and the testing tools. Even when those conditions exist, trying to fully test a Flex application through unit tests often becomes awkward and cumbersome. Thus, FlexMonkey strives to offer developers a way to create developer-level functional tests for their Flex and AIR applications.

Developer Testing versus Quality Assurance Testing

Due to the unique challenges of testing user interfaces, we’ve taken to discussing Flex testing goals in terms of the team member’s role before diving into the method of testing, with the most common being the differentiation between developer testing activities and QA testing activities. While the goals may differ slightly, FlexMonkey is well suited for use by both roles, and has many existing users in each camp. In this section, the focus will be more on using it as a developer testing tool, as it is already common to use functional testing tools for QA testing.

There is nuance in every development team’s testing goals. Our primary goal when creating a developer test harness is to protect against regression and ensure that the entire development team is able to refactor and change things without a complete understanding of every line of code in the system. As many of us have experienced too many times, it is scary to change code in a legacy code base that does not have proper testing. Using FlexMonkey to create a developer set of functional tests is a powerful way to alleviate this pain when building Flex and AIR applications.

When asserting that FlexMonkey should play a major role in developer testing of Flex applications, a common question asked is, “Should I use FlexMonkey to do all my Flex testing?” The short answer to this question is “No.” As we discussed in the previous section, unit testing and functional testing have different benefits and limitations. Unlike unit testing, functional testing does not provide much help in isolating where the problem exists, but instead allows you to have broad coverage and instant knowledge that something in the system has been broken from the end user's perspective.

When working with an active Flex development project where there is an opportunity to write the code with a testable approach, the ideal testing solution is to create a mix of unit tests, with FlexUnit, and functional developer tests, with FlexMonkey. In this case, we utilize FlexUnit for traditional business logic and controller type classes (i.e., controllers in the commonly used model-view-controller pattern) and FlexMonkey to get a wide breadth of validation coverage on how the application actually works for the end users.

On the typical greenfield application development project that takes this approach, we prefer to split the testing work between FlexMonkey and FlexUnit, with about 75% of the testing effort accomplished through functional developer tests created with FlexMonkey. However, on a legacy code base, it can often be much simpler and cheaper to do the majority of the testing with FlexMonkey, as success in building a test suite is far less dependent on how the code has been developed. It’s also important to note that with both tools, tests can be fully automated to run in all the common CI environments (Hudson / Jenkins, CruiseControl, TeamCity, etc.). FlexMonkey actually generates FlexUnit code for these purposes, so in a CI environment all tests are run as FlexUnit tests.

Hopefully, that gives you an understanding of how to best use FlexMonkey to achieve your testing goals.

Using FlexMonkey

This section walks you through the basics of using FlexMonkey.

Basic Setup

Getting up and running with FlexMonkey is quite straightforward. The first time you launch FlexMonkey it will prompt you for a directory to store the project files (you typically want to use a “tests” directory in the root of your Flash Builder project). In this directory, FlexMonkey will store XML files that include the project configuration and the data for tests, and a snapshot directory for any bitmap comparison files.

Once you have configured the project directory, you will need to configure a Flex or AIR project for testing. This includes adding a SWC library and some compiler arguments to your project setup. The version of the SWC library and the arguments vary depending on your Flex SDK version and whether you are using Flex or AIR, so follow the “Setup Guide” on the Project > Properties screen of FlexMonkey to configure your project.

FlexMonkey does have a dependency on the Adobe Automation Framework, which is licensed as part of Flash Builder Premium. Even if you do not have Flash Builder Premium, you can still try out FlexMonkey, but the automation framework will throw an error if you attempt to do too many interactions in a single test run (the limit is in the 15-30 range).

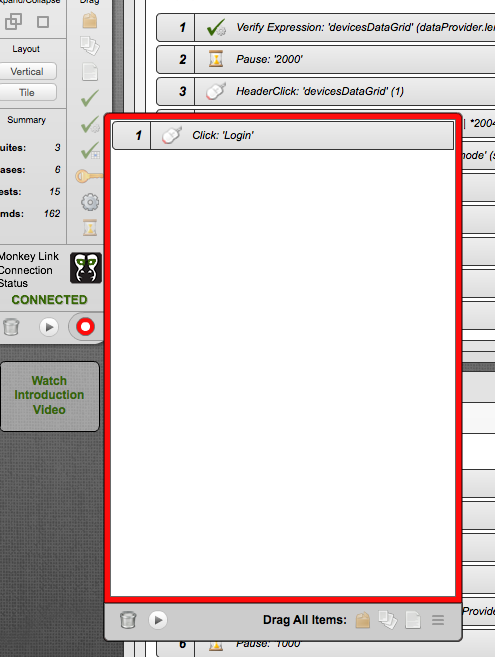

Once you have successfully configured FlexMonkey with your application, you should be able to run your application as you usually would (i.e., in the browser or by launching the AIR application) and see the FlexMonkey console change from “Not Connected” (see Figure 2) to “Connected” (see Figure 3).

Figure 2. Not Connected

Figure 3. Connected

Recording and Instrumenting

Now that FlexMonkey is set up, it’s time to look at how you use it to record interactions with your Flex and AIR applications. To begin recording, click on the record button at the bottom of the left navigation panel. This will open a recording buffer dialog, as seen in Figure 4.

Figure 4. FlexMonkey Record Dialog

Once the recording dialog is open, all you need to do is interact with your application to capture the user-generated events. The captured events will appear in the recording buffer dialog. When you are done interacting with the application, you can stop the recording session and then drag the recorded events from the recording buffer dialog into the test items. The recorded events can be added to existing Suites, Cases, or Tests as individual items, or dragged in as brand new Suites, Cases, or Tests.

Test Constructs

Now that you understand how to capture interactions with your application, let’s take a look at the different things that make up FlexMonkey tests. An overview of the items follows:

- Suites
- Cases
- Tests
- User Event Command
- Component Verification
- Expression Verification
- DataGrid Cell Verification
- Set Property Command
- Store Value Command
- Call Function Command
- Pause

Similar to many other testing tools, FlexMonkey maintains the typical Suite, Case, Test hierarchy. The Test Suites are the top level grouping and hold Test Cases.

A Test Case is a grouping that acts as a test fixture (i.e., a set of test items that run in order from a known state of the application).

Tests hold the actual test elements. This includes items you’ve recorded and the other test constructs described here.
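To make the Suite, Case, Test nesting concrete, here is a minimal Python sketch of the hierarchy as plain data classes. It is an illustration only and does not reflect FlexMonkey's actual XML project format or internals; the class names, the fields, and the `walk` helper are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple


@dataclass
class Test:
    """Holds the actual test items (recorded events, verifications, ...)."""
    name: str
    items: List[str] = field(default_factory=list)


@dataclass
class Case:
    """Groups tests that run in order from a known application state."""
    name: str
    tests: List[Test] = field(default_factory=list)


@dataclass
class Suite:
    """Top-level grouping that holds test cases."""
    name: str
    cases: List[Case] = field(default_factory=list)


def walk(suite: Suite) -> Iterator[Tuple[str, str, str]]:
    """Yield (case, test, item) in the order a runner would play them."""
    for case in suite.cases:
        for test in case.tests:
            for item in test.items:
                yield case.name, test.name, item


# Hypothetical example data; the item labels are made up for illustration.
login = Test("Login", ["type username", "click submit", "verify welcome label"])
suite = Suite("Smoke", [Case("Authentication", [login])])

for step in walk(suite):
    print(step)
```

Playing a Suite then amounts to visiting every item depth-first, which is why the order of Cases and Tests matters when later items depend on state left behind by earlier ones.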

The User Event Commands are the items captured during recording.

Component Verifications are used when you want to verify that one or more properties directly on a Flex component are equal to a value.

Expression Verifications are used to check whether a property on a component is equal to, greater than, less than, or contains a value. This type of verification supports dot notation for validating child properties (e.g., dataProvider.length > 0).
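The idea behind a dot-notation check such as dataProvider.length > 0 can be sketched in a few lines of Python. This is not FlexMonkey's implementation; `resolve_path`, `verify_expression`, and the stand-in `grid` object are hypothetical names chosen for illustration, and only the four comparisons named above are modeled.

```python
import operator
from types import SimpleNamespace

# The comparisons named in the text: equal, greater than, less than, contains.
OPERATORS = {
    "==": operator.eq,
    ">": operator.gt,
    "<": operator.lt,
    "contains": operator.contains,
}


def resolve_path(target, path):
    """Walk a dotted property path such as 'dataProvider.length'."""
    value = target
    for name in path.split("."):
        value = getattr(value, name)
    return value


def verify_expression(target, path, op, expected):
    """Return True when the resolved property satisfies the comparison."""
    return OPERATORS[op](resolve_path(target, path), expected)


# Stand-in for a Flex DataGrid whose dataProvider holds three rows.
grid = SimpleNamespace(
    dataProvider=SimpleNamespace(length=3),
    label="Orders grid",
)

print(verify_expression(grid, "dataProvider.length", ">", 0))
print(verify_expression(grid, "label", "contains", "Orders"))
```

Resolving the path one segment at a time is what lets a single verification reach into child objects without the test author writing any traversal code.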

DataGrid Cell Verifications are used to validate the value of a cell in a Flex DataGrid or AdvancedDataGrid.

The Set Property Command allows you to change the value of child properties of a Flex component at runtime. Similar to the Expression Verification, you can use dot notation to traverse the tree of objects.

The Store Value Command allows you to capture a property value at a given point in time for use later, either in the Set Property Command or any of the Verifications.

The Call Function Command allows you to call functions inside your code. You select the user interface component that has the function and then provide FlexMonkey with the function name to call (note: it must be public and take no arguments).

Pauses allow you to tell FlexMonkey to wait for a specified period of time. They are convenient to use, but often can make test suites brittle, so you typically want to use the “Retry” properties of each command to deal with asynchronous timing.
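The difference between a fixed pause and a retry can be illustrated with a small Python sketch. It assumes a retry means "re-check a condition a limited number of times with a short delay between attempts"; the article does not spell out how FlexMonkey's “Retry” properties are defined, so `retry_until`, `attempts`, `delay`, and `SlowPanel` are hypothetical names.

```python
import time


def retry_until(check, attempts=10, delay=0.05):
    """Poll check() until it returns True; return the attempt that succeeded."""
    for attempt in range(1, attempts + 1):
        if check():
            return attempt
        time.sleep(delay)
    raise AssertionError(f"condition not met after {attempts} attempts")


class SlowPanel:
    """Hypothetical component whose data arrives only after a few polls."""

    def __init__(self, polls_needed):
        self._remaining = polls_needed

    def is_loaded(self):
        self._remaining -= 1
        return self._remaining <= 0


panel = SlowPanel(polls_needed=3)
attempts_used = retry_until(panel.is_loaded, attempts=10, delay=0.01)
print(f"verified after {attempts_used} attempt(s)")
```

A fixed pause has to be long enough for the slowest machine, so it either wastes time or fails intermittently. A retry returns as soon as the condition holds and fails only when the overall budget is exhausted.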

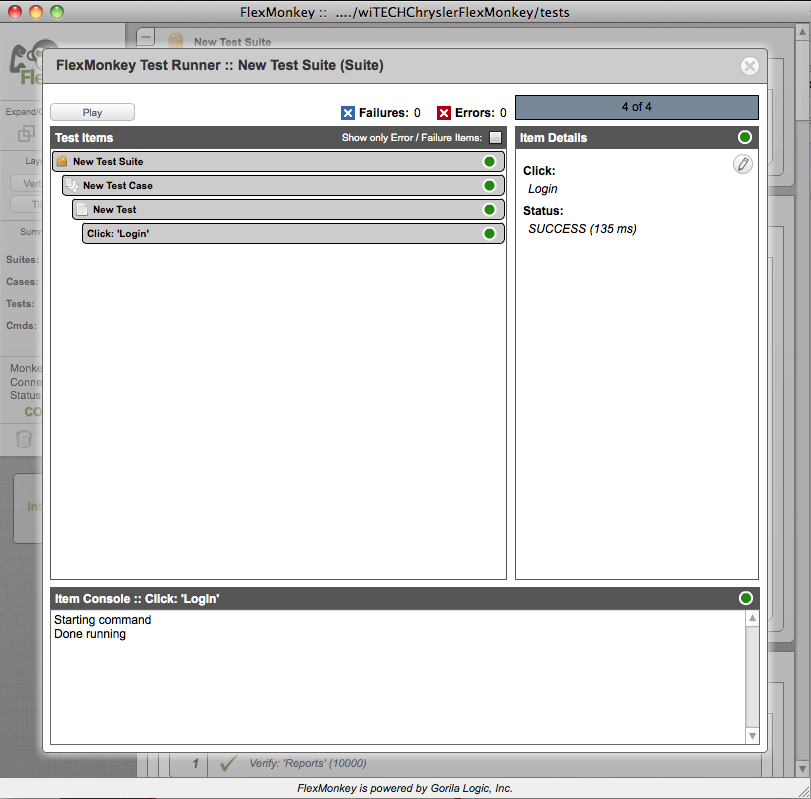

Running tests in the console

Once you have test items, you can play them by clicking the play button on the individual items, Suites, Cases, Tests, or the master play button on the left navigation to play the entire set of tests. Once you click on play, the runner user interface will overlay in the FlexMonkey console and begin playing (see Figure 5). While the test runner is playing test items, you will simultaneously see the events being executed in your application, just as if you were interacting with the application directly.

When creating test items for playback, it is important to think through flows that will be re-playable. You want to avoid having to restart the application after automation events have changed the state of the application. So, you typically want to use the convention of creating tear-down items at the end of each body of work to return the application to a known state (similar to the approach followed when creating unit tests). The typical way to structure FlexMonkey tests is to use recorded events to take the application into a state you desire to test, perform verification / assertions, and then tear down the test to return the application to its known base state.

Figure 5. FlexMonkey Test Runner
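The drive, verify, tear-down structure described above mirrors the fixture pattern in unit testing frameworks. The Python sketch below shows the pattern with the standard `unittest` module against a made-up in-memory application; `FakeApp`, its methods, and the test names are hypothetical and stand in for a running Flex application that is never restarted between tests.

```python
import unittest


class FakeApp:
    """Hypothetical stand-in for the application under test."""

    def __init__(self):
        self.cart = []

    def add_to_cart(self, item):
        self.cart.append(item)

    def clear_cart(self):
        self.cart.clear()


# One long-lived instance, like an application that keeps running between tests.
APP = FakeApp()


class CartFlowTest(unittest.TestCase):
    def tearDown(self):
        # Tear-down step: return the application to its known base state.
        APP.clear_cart()

    def test_add_one_item(self):
        APP.add_to_cart("widget")            # drive the app into the state to test
        self.assertEqual(len(APP.cart), 1)   # verification

    def test_add_two_items(self):
        APP.add_to_cart("widget")
        APP.add_to_cart("gadget")
        # Would see leftover items from the other test without the tear-down.
        self.assertEqual(len(APP.cart), 2)


loader = unittest.defaultTestLoader
result = unittest.TextTestRunner(verbosity=0).run(
    loader.loadTestsFromTestCase(CartFlowTest)
)
print("all passed:", result.wasSuccessful())
```

Because both tests share one application instance, removing the tear-down makes the outcome depend on execution order, which is exactly the replay problem the convention is meant to avoid.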

Conclusion

This article gave you an understanding of the basics of using FlexMonkey for testing your Flex applications in the browser and on the desktop (Adobe AIR). For additional information on FlexMonkey, see the Resources section.

Resources

This article describes the functionality of the very latest version of FlexMonkey, which is currently in beta release. You can find it at:

http://www.gorillalogic.com/flexmonkey/beta

Flex.org

http://www.flex.org

Flex 4 Fun by Chet Haase

http://www.artima.com/shop/flex_4_fun

FlexUnit.org

http://www.flexunit.org

Intro to FlexMonkey - Open Source Automated Testing for Flex Apps, an educational video by James Ward:

About the authors

Victor Szalvay is CTO of Danube Technologies, Inc., and product owner of ScrumWorks Pro.

James Ward is a Technical Evangelist for Flex at Adobe and Adobe’s JCP representative to JSR 286, 299, and 301. Much like his love for climbing mountains, he enjoys programming because it provides endless new discoveries, elegant workarounds, summits, and valleys. His adventures in climbing have taken him many places. Likewise, technology has brought him many adventures, including: Pascal and Assembly back in the early ’90s; Perl, HTML, and JavaScript in the mid ’90s; then Java and many of its frameworks beginning in the late ’90s. Today he primarily uses Flex to build beautiful front-ends for Java based back-ends. Prior to Adobe, James built a rich marketing and customer service portal for Pillar Data Systems. You can read his blog at http://www.jamesward.com/.
