As I write this, I'm trying to install some popular diagnostic tools, downloaded from a popular computer manufacturer, onto my laptop, using a popular install program. The install has been using 100% of a CPU for half an hour. That's 4,503,273,209,800 clock cycles so far, time enough for 1,258,920,345,600 memory accesses and 180,000 random disk accesses. Far more computing resources than were needed to first put humans on the moon.

What in the name of Turing is the install doing with those resources? I have no idea. It refuses to be sampled by the activity monitor. It says it's searching my hard drive, but hard drive access is sporadic, and it could have traversed my hard drive many times by now. And when asked to quit it emits a scary message about possibly leaving behind an "incomplete set of software" and encourages me to Resume. So I Quit, and will wait for my machine to cool down enough to not scald my lap before I try again.

And I'm not singling out this install. My experience has long been that my new computers keep getting exponentially more powerful, thanks to Moore's Imperative, but many of my new programs don't get any faster at all, and some are unbelievably slow. Molasses-in-January slow. Sedated-sloth slow. Anaesthesized-slug slow.

So how do we manage to waste such abundant resources? We have our ways.

Complexity

"You could look it up." -- Yogi Berra

The computer science literature likes to think of computational complexity less than exponential as "tractable." If you have a big enough tractor maybe so, but in most work anything worse than near-linear is unacceptable. So how do we wind up with quadratic, and worse, performance?

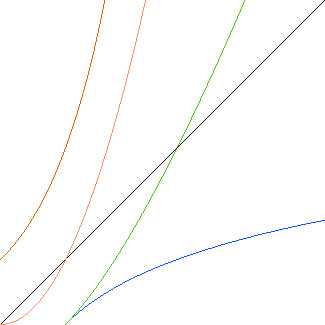

Sometimes, as for most multivariate statistics, there are no better algorithms. Matrix inversion is cubic, singular value decomposition is quadratic, and that's that. But when we have a choice, it matters. This is easily seen by graphing e**x (red), x**2 (orange), x*ln(x) (green), x (black) and ln(x) (blue):

[Note: one graphing calculator program hung and another crashed in the course of the few minutes I spent creating this graph. Both are Java programs.]

On this graph x and y run from 0 to 5. As x gets bigger y=e**x and y=x**2 rise ever more quickly, y=x*ln(x) approaches a straight line, y=x is a straight line, and y=ln(x) approaches a constant value. Obviously, as x gets ever larger the near-linear, linear, and near-constant algorithms become ever more vastly preferable to super-linear ones.

[Note: I got the notions of O(n log n) as near-linear and O(log n) as near-constant from Sean Parent.]

So consider the common problem of sorting an array. The venerable bubble sort is easy to code, and is the kind of algorithm you might reinvent if you didn't know better:

template<typename iter>

void bubblesort(iter first, iter last) {

if (last - first <= 1)

return;

bool swapped;

do {

swapped = false;

--last;

for (iter p = first; p != last; ++p)

if (p[0] > p[1]) {

swap(p[0], p[1]);

swapped = true;

}

} while (swapped);

}

So what's the problem? On average this routine takes quadratic time -- the number of operations is proportional to n**2.

Now this is a textbook example, but in general it is easy to go super-linear without knowing. Just invoke a library routine or system call N times that is, unbeknownst to you, O(N), and there you have it. For example, the search algorithms in the C+ Standard are O(log(N)) when used with random-access iterators, but O(N) when used with input iterators. Or (re-)invent an algorithm of non-obvious complexity, or implement a published algorithm of undocumented complexity, and expect to be surprised. Worse, you won't notice any problem with your fast development machines and small test cases, but your customers will run more data on slower machines and hit the wall.

How to do better than bubblesort? Perhaps use Hoare's Quicksort algorithm, which has near-linear O(n log n) complexity on average:

template<typename iter>

void quicksort (iter first, iter last)

{

if (last - first <= 1)

return;

iter p = first, q = last;

--q;

iter pivot = p + (q - p) / 2;

swap(*pivot, *q);

iter mid = p;

for (; p != q; ++p) {

if (!(*p < *q)) continue;

swap(*p, *mid);

++mid;

}

swap(*q, *mid);

quicksort(first, mid);

quicksort(mid + 1, last);

}

So how do we know which sort is faster? Maybe by just looking, or by testing, or by formal big-O analysis.

Or you could look it up.

void sort(RandomAccessIterator first, RandomAccessIterator last);

... Sort all elements in the range [first, last)... sort() guarantees a good performance (n-log-n) on average. However, if avoiding worst case performance is important, you should use partial_sort() or stable_sort() ...

-- Josuttis 1999

Thanks to the Standard Library you often don't have to get your algorithms right, you just have to get the right algorithms.

Looping

"It's like deja vu all over again." -- Yogi Berra

If you want to redline your CPU to no purpose, try this:

loop:

goto loop;

Silly, eh?

But there are lots of less obvious ways to loop while accomplishing little or nothing, all of them leading to a runaway program.

Looping also amounts to How To Run Hot, where heat means wasted energy and fried equipment, and cooling also wastes a lot of expensive energy.

Spinning

"There must be some way out of here." -- Bob Dylan

One common way to loop infinite is called spinning or busy waiting. It typically shows up in logic like this:

loop:

if (!data_available)

goto loop;

get_data();

If you know that the data will be available soon it works fine, but if not it's just a waste. One easy fix is to sleep while waiting, so as to yield the CPU to another thread:

loop:

if (!data_available) {

sleep(timeout);

goto loop;

}

get_data();

You still may never get out of the loop, but at least you won't be hogging a CPU the whole time.

Hanging

"I've been where you're hanging, I think I can see how you're pinned..." -- Leonard Cohen

Consider:

f(x);

What if f() never returns? Perhaps it is waiting on keyboard input, or an internet connection, or a hard drive access, or a semaphore, or some other event that may never occur. At worst, you can leave it to the user to kill the hung program. Better, f() will use a timeout to avoid waiting forever. Or, failing that, you can run the loop in a separate thread or process that can be killed automatically when it appears to hang. In some cases you can use an asynchronous I/O package like Boost.asio to avoid ever waiting at all.

One of the easiest ways to hang is to deadlock via the misuse of mutual-exclusion primitives that control access to shared resources by multiple threads or processes. The classic deadly embrace looks like this:

// Thread 1: // Thread 2: acquire(U); acquire(V); acquire(V); acquire(U); . . . . . .

Note that these threads do not acquire their resources in the same order. As a result, Thread 1 can acquire U at the same time as Thread 2 is acquiring V, and then neither thread can make progress as Thread 1 is waiting on V, which Thread 2 holds, and Thread 2 is waiting for U, which Thread 1 holds.

This may seem obvious, but is easy enough to do, especially as your patterns of resource acquisition get more subtle and complex. The answer here is to stick as much as possible with higher-level idioms -- e.g. asynchronous I/O, two-phase locking, message passing, map-reduce -- that enforce safe use of the dangerous primitives.

Thrashing

"Hit me again, harder." -- Cygwin error message

Consider some code that sums a square array:

for (row = 0; row < N;, ++row)

for (col = 0; col < N; ++col)

sum += A[row][col];

Or you can do it the other way round:

for (col = 0; col < N; ++col)

for (row = 0; row < N; ++row)

sum += A[row][col];

So does it matter? Indeed it does!

In C++ arrays are stored row-wise in contiguous memory. So if you traverse the array rows first you will traverse the data sequentially. That means that the next data point you need is likely to be in pipeline, cache, RAM, and the next hard drive sector before you need it. But if you traverse columns first then you will be repeatedly reading just one item from each row before reading from the next row. As a result your system's caching and lookahead will fail, and you will waste a lot of time waiting for RAM, which is about three to five times slower than cache. Even worse, you may wind up waiting for the hard drive, which can be millions of times slower than RAM if accessed in large random jumps.

How much time is wasted? On my machine summing a billion bytes row-wise takes 9 seconds, whereas summing them column-wise takes 51 seconds. The less sequential your data acccess, the slower your program will run.

Cache ping-pong is a more subtle form of thrashing that can happen when more than one CPU is using data that is cached on the same line. For example, say there are two CPUs using an array of structures:

struct flags {

bool pushed;

bool popped;

} states[N];

There is no conflict: One CPU touches only flags.pushed, and the other only flags.popped. But if these two elements are cached together then when the first CPU modifies a flags.pushed the cache coherence machinery will need to copy that cache line over to the second CPU's cache. Ping.

And when the second CPU modifies a flags.popped its cache line will have to be copied over to the first CPU's cache. Pong.

The answer is to lay out your data in memory so that the elements needed by each CPU are on separate cache lines. This may mean breaking up logical structures and spreading their elements across multiple arrays, in a pattern reminiscent of old-school FORTRAN:

struct flags {

bool pushed[N];

char pad[LINE_SIZE];

bool popped[N];

} states;

Crashing

"You don't come back from Dead Man's Curve." -- Jan and Dean

Consider:

*p = x

Easy enough. But what if p==0 or some other invalid value? If you are lucky your program just stops when the virtual memory hardware traps the illegal access. If you are unlucky your program or system state is corrupted. If you are very unlucky a malicious person or program will take advantage of the corrupted state to infect your system, or even worse your customers' systems.

So at best your program is doing nothing, and at worst your customers' systems are hijacked as porn and warez servers. Either way, no progress is slow progress.

The easiest way to avoid this is to avoid languages with undefined behavior, but that can mean non-trivial costs in runtime and memory, as we will see below. And even safe languages can have bugs in their implementations, as we noted above. Failing that, treat raw pointers and other opportunities for undefined behavior like infectious diseases, to be quarantined and tested for the safety of the program.

Indirection

"All problems in computer science can be solved by another level of indirection, but that usually will create another problem." -- David Wheeler, inventor of the subroutine

As I write this I'm running an awk program in the background. My awk code is being interpreted by the gawk program, which is written in C. The interpreter is doing a lot of string manipulation where a C program would use ints, and is otherwise using up a lot of real machine resources emulating the awk virtual machine, and when not chewing up a CPU that way it's mostly waiting for the read() or write() BSD system calls:

read

readv

dofileread

fo_ops->fo_read

vn_read

VOP_READ

VOP_READ_APV

vop->vop_read

ffs_read

Source: BSD System Calls

The BSD system calls go through several layers of indirection before getting to the file system interfaces, and those interfaces call through more layers on the way to the hard drive and network interfaces, and those interfaces entail more layers of software, firmware and hardware, ultimately representing bits as modulated light or electrical impulses, chemically altered film on a spinning plastic disc, magnetized atoms on a spinning metallic disk, or in a number of other ways.

So probably some fifteen, twenty, thirty layers in all from the awk interpreter down to the harvesting of the bits on the media.

Do these layers hurt performance? It depends. The layers of a file system are designed to provide as direct a mapping as possible from high-level subroutines down to the hardware. And since hard disks are astronomically slower than CPUs, the minimal overhead of the layers shouldn't matter much anyway.

In cases like an awk interpreter, where the mapping is more complex, the resulting impedance mismatch can make it difficult to reason about performance, and difficult to do even simple things without excessive overhead. And in cases where the lowest layer is fast relative to the highest, the layers themselves can take a significant proportion of time.

For example, the C++ code above for summing a square array, when translated to awk, sums a billion bytes 1,800 times slower and using 64 times as much space. Yes, that bad.

Why? Because gawk doesn't provide an integer-indexed array with contiguously-stored elements. Instead it provides a string- indexed associative array, implemented as a hash table, so that array lookup requires conversions from integer to string, a string hash and table lookup, and conversions from string back to integer. Plus, the hashing means that sequentially indexed elements are scattered all over the table. Also, being an interpreter, gawk suffers the overhead of emulating its virtual machine on a real one. So the impedance mismatch between awk and the computer can be high, but I still use awk when the match between awk and the problems I'm solving is good enough to be worth the slow performance.

Conclusion

"In theory there is no difference between theory and practice. In practice there is."

-- Yogi Berra

-- Jan L. A. van de Snepscheut

-- Albert Einstein

Computation is a physical process. We are not proving theorems, we are building machines. We specify our machines in more-or-less formally-specified languages. Our languages contain a mix of more-or-less compromised imitations of a handful of logical and mathematical concepts, chosen for their similarity to the operations of the lower-level machines on which the language rests, and for their suitability for building higher-level machines. These layers of more-or-less virtual machines ultimately rest on real hardware, with finite limits on its resources. To avoid going slow we must respect these limits. We do that by understanding the algorithmic structure of our programs and mapping that structure efficiently onto the stack of machines that runs those programs. I hope I have at least alerted you to some of the ways of going wrong in that task.

PS: Before trying to install those tools again I figured I better uninstall. The uninstall proceeded to chew up a CPU for an hour without making progress until I killed it, claiming it still had 27 items left to delete. Guess I'll live with the litter, and do without those tools.

References

Intel(R) Core(TM)2 Duo Processor -- Specifications

http://www.intel.com/products/processor/core2duo/specifications.htm

JEDEC DDR2 SDRAM SPECIFICATION

http://www.jedec.org/download/search/JESD79-2C.pdf

Hitachi Travelstar(R) 7K100 2.5-INCH HARD DISK DRIVES

http://www.hitachigst.com/tech/techlib.nsf/techdocs/50C8DBC2A315A4C786256F400065B756/$file/7K100_SATA_FINAL_DS.pdf

Josuttis, Nicolai. The C++ Standard Library. Addison-Wesley 1999

http://www.josuttis.com/libbook/

Becker, Pete. The C++ Standard Library Extensions. Addison-Wesley 2006

http://safari.awprofessional.com/0321412990

Talk back!

Have an opinion? Readers have already posted 54 comments about this article. Why not add yours?

About the author

Dr. Greg Colvin has been hacking happily since 1972. He is a contributing member of the ANSI/ISO C++ standards committee (and chief designer of auto_ptr) and a founding member of Boost. If he told you what his current business is he would have to hire you. In his spare time he plays apocalyptic blues guitar in his Colorado, USA home studio and in various disreputable dives.

Artima provides consulting and training services to help you make the most of Scala, reactive

and functional programming, enterprise systems, big data, and testing.

2070 N Broadway Unit 305

Walnut Creek CA 94597

USA

(925) 918-1769 (Phone)